The Burroughs B5900 and E-Mode

A bridge to 21st Century Computing

By Jack Allweiss

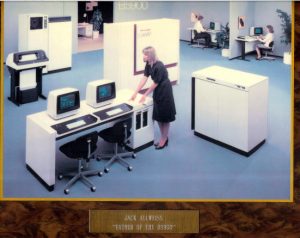

My name is Jack A. Allweiss, also known as “The Father of the B5900 System”. I did not give myself that title; my friends and co-workers at Burroughs Corporation did, and I consider it a great honor. This true story is about the B5900 and why it was an important milestone for Burroughs and later Unisys, as well as for the computer industry in general.

40th Anniversary Foreword

January 2018 is the 40th anniversary of my proposal to Erv Hauck, Bob Merrill, and the management of the Burroughs Large System Plant in Mission Viejo, California for an Entry Level System, eventually to be called the B5900. That machine was the predecessor of the entire E-mode architecture line that Unisys still sells today and that is used by many major corporations and governments around the world. It seems like only yesterday to me, but forty years is a long time. I found myself wondering how technology in 1938 compared with technology in 1978, the same 40-year interval. Looking back, it was quite different! In 1938 locomotives were still driven by steam and planes were driven by propellers. I wanted to try to quantify the difference between the computer processors of my era and the ones of today, with a focus on architecture, and to explore what is very different and what is amazingly similar.

A little over a year ago I took a graduate course in microprocessor architecture at the University of California, Irvine. My goal was to see what had changed in forty years. I found it interesting that the professor spent almost half the semester studying computer architectures from the sixties and seventies. In the second half of the class I found out why. The dominant modern microprocessor, the ARM processor at the core of almost every smartphone in the world (yes, even Apple uses that processor architecture), shares many of the characteristics of these early computer architectures. The fact is that a computer processor designer from the seventies would feel very comfortable working on these new processor designs.

So, not much has changed? Not true; a lot has changed. What has changed is that the constraints that I and my fellow designers worked under have been, to a great extent, lifted. The result is that the best of all that was learned from the 1940s to the 1990s can be exploited at virtually no cost. Because of cost constraints, the constraints of physics, and limited design tools, designers of systems like the B5900 or the IBM 370 in 1978 had to constantly make major tradeoffs. An example: an important part of the B5900's performance was the Lambda/Delta register hardware. If cost and space had not been constraints, having many of these registers would have significantly enhanced performance. The problem was that the registers were expensive, and every one we added drove the cost of the machine higher. We ended up with only four registers, which let us meet our performance goal. If I were to develop the B5900 in 2018 using modern microprocessor hardware technology, I could have as many as I wanted, at no real cost.

Why? Modern semiconductor technology can pack so many transistors on a chip that by the end of the 20th century designers had run out of things to do with them! They had so much space left over on a chip that they could pack duplicate processors onto it. The results have been stunning. A mainframe computer of the late 1970s has only a small fraction of the processing power of an average smartphone.

Does the smartphone have a magic processor architecture? No, the ARM processor is actually a very simple RISC load/store architecture, but designers have exploited pipelining, register remapping, and other techniques to significantly enhance performance. The best techniques from Honeywell, Control Data Systems, IBM, Burroughs, GE, and other designs of the past have all been incorporated to squeeze every possible bit of performance out of this very basic architecture. Then, using advanced software and advanced memory architectures with structures like Burroughs Global Memory, designers add two, four, or eight processors to the mix to share the workload. Finally, because all this can fit on a single piece of silicon (including cache memory and special purpose processors for functions such as graphics), the processor can operate orders of magnitude faster than a machine that must send signals between separate physical cabinets long distances apart.

I know what you must be thinking: “computer processor design seems to have become kind of boring, no real architecture changes in forty years!” Again, this is not really the case. Architecture has changed on two major fronts: one is digital, and the other is Quantum.

You would not be wrong if you declared that almost all the advances in digital computers in the last twenty or thirty years have been in software. The huge leaps in performance, storage, and cost reduction have made it possible to build software that significantly enhances what computers can do. At the same time, we have also put new demands on our processors. In 1978 computers were connected to the power grid. Power was unlimited. If they got hot, you put them in an air-conditioned room or water cooled them. Yes, some special applications required computers outside the controlled environment of the computer room, but that was the exception, not the rule, back then. Fast forward to today: 90% of processors run on a battery, power is limited, and there is no air conditioning or special environment. These battery powered computers must operate for many hours, days, or even years between charges. Power use has become the major challenge for today’s processor designers, and it is an architectural challenge. It is not a small challenge. A computer in 2018 is thousands of times more powerful than the mainframes of 1978, but it must run on a tiny battery! In the last ten years, amazing advances in exploiting specialized multi-core processors and advanced operating systems have made this transformation to small, powerful, energy efficient processors possible.

Finally, computer architects are throwing everything we know about design out the window to develop the new generation of Quantum computers. Like the very early machines, these are large and power-hungry beasts and they don’t yet work very well! But that is always the way it starts. It would be so great to see where the Quantum computer revolution in computer architecture takes us forty years in the future!

Jack Allweiss

January 2018